In the paper A Formally Verified NAT Stack, the authors describe a software-defined network function that is “semantically correct, crash-free, and memory safe.” That’s right: it has no bugs, it will never crash, and they can prove it! But how important is this?

For decades, formal verification has been lurking with a promise of helping us make better systems. Since the beginning of programming, there has been steady work to create languages, tools and systems to do formal verification, which is an analytic method of exhaustively proving the correctness of code, rigorously specifying mathematical models to ensure correct behaviour.

At the forefront of this effort was Sir C.A.R Hoare, inventor of the quicksort algorithm, the null reference, and lifelong labourer on formal specification languages like Z and CSP.

But formal verification has never really caught on in software, and in 1996, at a talk at a theoretical computer science workshop, Hoare conceded:

Ten years ago, researchers into formal methods (and I was the most mistaken among them) predicted that the programming world would embrace with gratitude every assistance promised by formalisation to solve the problems of reliability that arise when programs get large and more safety-critical.

Programs have now got very large and very critical – well beyond the scale which can be comfortably tackled by formal methods. There have been many problems and failures, but these have nearly always been attributable to inadequate analysis of requirements or inadequate management control. It has turned out that the world just does not suffer significantly from the kind of problem that our research was originally intended to solve.

https://en.wikipedia.org/wiki/Tony_Hoare#cite_note-26

This begs the question, what is the problem that their research was intended to solve?

In product development there are two sides of “correctness”:

- Validation – did we build the right thing? Did we build something that people want to use?

- Verification – did we build it the right way? Have we made the thing we were trying to make?

Build the right thing; build the thing right…

As Ousterhout writes in A Philosophy of Software Design, “the most fundamental problem in computer science is problem decomposition: how to take a complex problem and divide it up into pieces that can be solved independently.” This is the essence of analysis. You would be forgiven for thinking, as many did during Hoare’s time, that analytic approaches would be successful in managing software development.

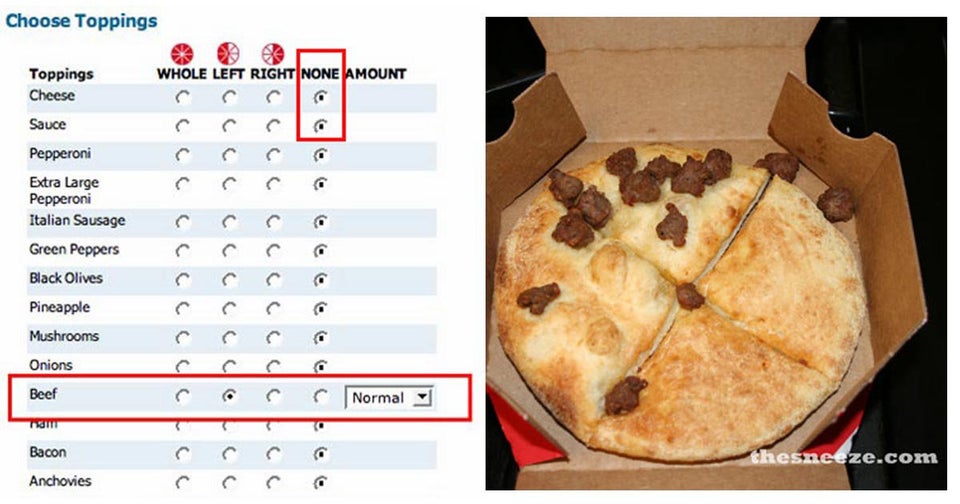

In terms of validation, we have seen a number of spectacular failures come out of analytic management approaches like Waterfall. We have seen a lot of software that technically works, but doesn’t meet the user’s needs:

So over the years, we learned to do validation differently.

We created processes that continuously check our work using empirical approaches – methods designed to integrate the testing of assumptions throughout the lifecycle of the product. A few years after Hoare’s speech, this was first articulated as the Agile Manifesto, and we have been building on it ever since then. I like to call these approaches Synthetic Management practices (synthetic means, “finding truth by recourse to experience”).

What about verification?

The book Software Engineering at Google describes the difference between programming (development) and software engineering (development, modification, maintenance). Software engineering is programming integrated across people and over time. I love this quote that highlights the difference: “It’s programming if ‘clever’ is a compliment, it’s software engineering if ‘clever’ is an accusation.” Being correct in the moment is a lot different than scaling correctness across people and over time, allowing the system to change but still be correct.

- To scale correctness, we need systems of verification, and here we turn heavily to the synthetic approaches. We manage our code using unit tests, functional tests, integration tests, build systems, ci/cd, and so on. Over the years, we have replaced manual QA processes with programmatic forms of verification that operate continuously throughout the lifecycle of our work. These are the systems of software engineering we use to enable a codebase to change reliably across many people over a long time.

So when it comes to verification techniques, we need to consider not whether a technique works, but whether the technique can be used as part of an organizational system of engineering.

Does formal verification have a place in systems of software engineering?

From the paper:

The proof of correctness of the NAT combines theorem proving for data structures code (stateful) and symbolic execution for the rest of the code (stateless). Even though theorem proving requires a lot of human effort, NF developers can reuse the verified data structures for many NFs. Symbolic execution is automated, and easy to run on a new NF

A Formally Verified NAT Stack, p. 9

There are two key points to make here:

- Formal verification is squarely analytic. We would need to decompose the problem upfront, and then use one-off manual processes to create code that is ‘eternally’ correct. This does not comport with the continuous, synthetic style of verification that has become so successful.

- To pull off the formal verification, a lot of manual effort goes into proving the data structures and then after the proving, the components become ossified, unchangeable. The code is perfect, so long as it doesn’t need change. It’s correct until we need to add new features. And then we have to do the whole “theorem proving” part again. That part is very hard.

The idea that we can reuse data structures without changing them is naïve. Write once, run forever? The real world doesn’t work that. Code needs to breathe, and the air it breathes comes through the winds of change. (ok, maybe that’s a little too much metaphor).

In other words, formal verification does not scale with systems of software engineering. It violates the principle that makes synthetic verification so effective: become part of the system. Formal proof lives outside the code, outside the system – it does not “take advantage” of the medium of code.

What to make of formal verification then?

Systems built using the medium of code need programmable proof that lives and executes with the code. Tests are easy to implement as part of the system, and so they have become the primary method of demonstrating “correctness”. Software is synthetic and therefore lends itself to synthetic proof.

But as Bentley says in Programming Pearls: formal proofs may not become organizationally useful in software, but they have improved and evolved our understanding of how to write good code. For learning about algorithms and expanding our collective understanding of computer science, formal proofs have value. So yes, there is a place for formal proof in software, just not in the software industry.