This article was originally published in full at InfoQ on Jan 6th, 2020

Key Takeaway:

Synthetic systems need to be managed using synthetic practices. The history of building software systems is a story of discovering and formalizing synthetic ways of working into practices that guide our unique process of creation.

A new kind of proof

Long before machine learning algorithms beat the world champions of Go, before search algorithms beat the grandmasters of Chess, computers took their first intellectual victory against the human mind when they were used to solve the Map Problem.

The solution was the first time a significant achievement in the world of pure mathematics was found using a computer. It startled the world, upending a sacred belief in the supremacy of the human mind, while revealing a new pattern of digital problem solving.

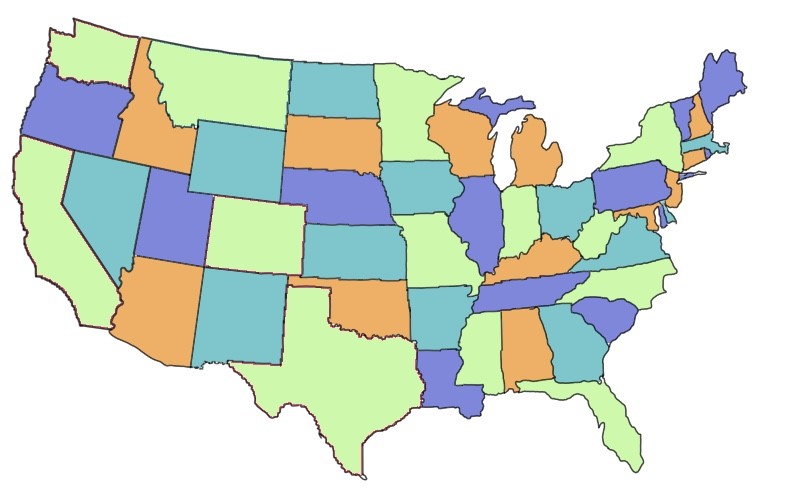

The Map Problem asks how many colours are needed to colour a map so that regions with a common boundary will have different colours.

Simple to explain, easy to visually verify, but difficult to formally prove, the Map Problem captured the imagination of the world in many ways: at first for the elegant simplicity of its statement, then, for over a hundred years, for the elusiveness of its solution, and finally for the brutal yet novel approach used to produce the result.

The Map Problem is foundational in graph theory, since a map can be turned into a planar graph that we can analyze. To solve the problem, a discharging method can be used to build an unavoidable set of configurations, meaning that every planar map must contain at least one of them. Each configuration in the set can then be tested for reducibility: a reducible configuration cannot appear in a smallest map that needs more than four colours.

If we are able to test every configuration in an unavoidable set and find that each one is reducible, then no such counterexample can exist and we will have the answer. But the act of reducing and testing configurations by hand is extremely laborious, perhaps even barbaric. When the Map Problem was first proposed in 1852, this approach could not possibly be considered.

Of course, as computing power grew, a number of people caught on to the fact that the problem was ripe for exploitation. We can create algorithms that have the computer do the work for us – we can programmatically see if any counterexample exists where a map requires more than four colours. In the end, that’s exactly what happened. And so we celebrated: four colours suffice! Problem solved?
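To make the idea of a programmatic check concrete, here is a minimal sketch that searches for a valid colouring of one small map. It is not the method of the 1976 solution, which worked through a large set of configurations rather than a single map; it only illustrates what "let the computer try the cases" looks like. The map `BORDERS` and the function name `colour_map` are hypothetical, chosen for illustration.

```python
from typing import Dict, List, Optional

# Hypothetical example map: regions are nodes, shared borders are edges.
# A hub region "A" touches a ring of five regions "B".."F".
BORDERS: Dict[str, List[str]] = {
    "A": ["B", "C", "D", "E", "F"],
    "B": ["A", "C", "F"],
    "C": ["A", "B", "D"],
    "D": ["A", "C", "E"],
    "E": ["A", "D", "F"],
    "F": ["A", "E", "B"],
}


def colour_map(borders: Dict[str, List[str]], n_colours: int) -> Optional[Dict[str, int]]:
    """Return a colouring in which neighbours differ, or None if none exists."""
    regions = list(borders)
    assignment: Dict[str, int] = {}

    def backtrack(index: int) -> bool:
        if index == len(regions):
            return True  # every region has a colour
        region = regions[index]
        for colour in range(n_colours):
            # A colour is allowed if no already-coloured neighbour uses it.
            if all(assignment.get(nb) != colour for nb in borders[region]):
                assignment[region] = colour
                if backtrack(index + 1):
                    return True
                del assignment[region]  # undo and try the next colour
        return False

    return dict(assignment) if backtrack(0) else None


if __name__ == "__main__":
    print("three colours:", colour_map(BORDERS, 3))
    print("four colours: ", colour_map(BORDERS, 4))
```

For this particular map the search finds no colouring with three colours and does find one with four, because the ring of five regions already needs three colours and the hub touches all of them.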

These are not the proofs you are looking for

Mathematics is analytical, logical. When examining a proof, we want to be able to work through the solution. With the Map Problem, mathematicians desired just such a proof, something beautiful, something that reveals a structure to explain the behavior of the system, something that gives an insight into the inner workings of the problem.

When the solution was presented at the 1976 American Mathematical Society summer meeting, the packed room was nonplussed. They did not get something beautiful – they instead got printouts. Hundreds and hundreds of pages of printouts, the results of thousands of tests.

Was this even a proof? The conference was divided. They said the solution was not a proof at all, but rather a “demonstration”. They said it was an affront to the discipline of mathematics to use the scientific method rather than pure logic. Many were unprepared to relinquish their life’s work to a machine.

The anxiety over the solution to the Map Problem highlights a basic dichotomy in how we go about solving problems: the difference between analytic and synthetic approaches.

The solution to the Four Color Theorem is synthetic. It arrives at the truth by exhaustively working through all the discrete cases, allowing us to infer the result via absence of a counter-example. It is empirically derived, using the ability of computers for programmatic observation – encoded experiences, artificial as they may be. It is a break from the past, with no proof in the traditional, analytic sense – if you want to check the work, you need to check the code.

And this is exactly how we solve all problems in code. We use a synthetic approach of creation and verification. We leverage the unique capabilities afforded by the medium of code to test, observe, experience, and “demonstrate” our results as fast as possible. This is software.

Software is synthetic

Look across the open plan landscape of any modern software delivery organization and you will find signs of it: a way of thinking that contrasts sharply with the analytic roots of technology.

Near the groves of standing desks, across from a pool of information radiators, you might see our treasured artifacts – a J-curve, a layered pyramid, a bisected board – set alongside inscriptions of productive principles. These are reminders of agile training past, testaments to the teams that still pay homage to the provided materials, having decided them worthy and made them their own.

What makes these new ways of working so successful in software delivery? The answer lies in this fundamental yet uncelebrated truth – that software is synthetic.

Ours are not the orderly, analytic worlds that our school-age selves expected to find. They are full of complexity and uncertainty, and we need a different way to find truth.

We have become accustomed to working on systems that yield to analysis. Where we find determinism, we can get real value from deep examination and formal verification. Since we can’t use a pull request to change the laws of physics (and a newer, better version of gravity will not be released mid-cycle), it’s worth spending the time to plan, analyze, and think things through.

But how work “works” in a synthetic system is different. We need approaches that control the indeterminism and use it to our advantage. When we learn to see this – when we shift our mental models about what the path to truth looks like – we start to make better decisions about how to organize ourselves, what practices to use, and how to be effective with them.

Software development offers a unique ability to get repeatable value from the uncertainty of open-ended synthesis, but only if we are willing to abandon our compulsion to analyze.

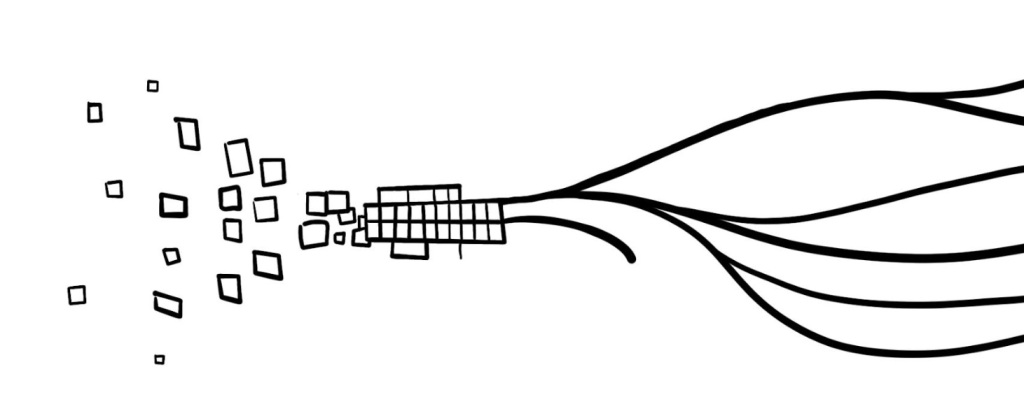

There is no other medium in the world with such a direct and accessible capability for generating value from emergent experiences. In code we weave together ideas and intentions to solve our very real problems, learning and uncovering possibilities with each thread, allowing knowledge to emerge and pull us in different directions.

The synthetic way of working shapes the daily life of development, starting with the most basic action of writing code and moving all the way up into the management of our organizations. To understand this, let’s work our way through the process.

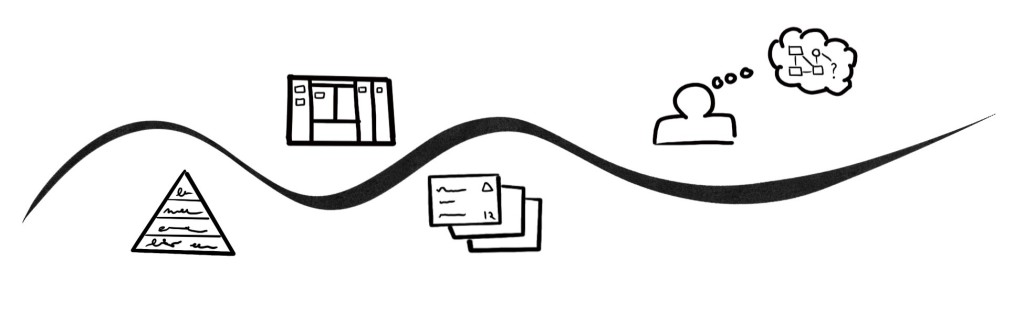

1. Will it work?

“… Most programmers think that with the above description in hand, writing the code is easy. They’re wrong. The only way you’ll believe this is by putting down this column right now and writing the code yourself. Try it.”

on “The Challenge of Binary Search” – Programming Pearls, p. 61

Our process of creation is the practice of bringing together millions of intangible elements. With each piece we add, we have the opportunity to create something new, with both beauty and function.

We start with a set of discrete primitives, elements, and structures. We use structure and flow to bring them together into algorithms. In this coupling we produce new elements, discrete in their own right, gathering new properties and capabilities. Arranging algorithms, we create runtimes and libraries, scripts and executables. The joining of these brings forth systems – programs and applications, infrastructure and environments. From these we make products, give birth to businesses, create categories, and build entire industries.

Our understanding of the systems we are building emerges and changes throughout the process of creation (this is the original definition of technical debt, by the way). We accept that we cannot truly know our system until we experience it: we learn from the understanding that rises bit by bit, commit by commit, as we watch our ideas coming to life. “Will it work?”

To answer this question, we write the code, we run it, we test it: we go and see.
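Taking up the binary search challenge quoted above, the "go and see" loop might look like the following sketch. The function names and the choice of test data (small lists of even numbers, so that odd targets are always absent) are assumptions made for illustration, not taken from Programming Pearls.

```python
from typing import Sequence


def binary_search(items: Sequence[int], target: int) -> int:
    """Return an index of target in the sorted sequence items, or -1 if absent."""
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2
        if items[mid] < target:
            low = mid + 1       # target can only be to the right of mid
        elif items[mid] > target:
            high = mid - 1      # target can only be to the left of mid
        else:
            return mid
    return -1


def check_against_linear_scan(max_len: int = 8) -> int:
    """Compare binary_search with a plain membership test on many small cases.

    Returns the number of cases checked; raises AssertionError on a mismatch.
    """
    checked = 0
    for n in range(max_len + 1):
        items = [2 * i for i in range(n)]      # 0, 2, 4, ... (sorted, no odd values)
        for target in range(-1, 2 * n + 1):    # below, inside, between, and above
            found = binary_search(items, target)
            if target in items:
                assert found != -1 and items[found] == target
            else:
                assert found == -1
            checked += 1
    return checked


if __name__ == "__main__":
    print("cases checked:", check_against_linear_scan())
```

The check does not prove the function correct. It runs the code against every small case we can cheaply enumerate and reports the first disagreement, which is the kind of evidence the synthetic approach relies on.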

This process of creation demands verification through experience. This is how we find the truth of our assumptions – writing and running code. Getting our hands dirty. This is the fundamental organizing principle in our process of creation.

In a synthetic system, the first thing we need to do is to experience our work to verify our inferences. As we give instructions to the system, we need to give the system the opportunity to teach us. The greater the uncertainty of our synthesis, the more we have to learn from our experiences.

So we optimize our daily routines for this kind of learning. Test-driven development, fast feedback, the test pyramid, pair programming: these are all cornerstones of good software development because they get us closer to experiencing the changes we make, in different ways, within the context of the whole system. Any practice that contributes to or adds dimensions to how we experience our changes will find itself in good company here.

2. Does it still work?

As a system grows to include large numbers of components and contributors, its dynamics become increasingly unpredictable. When we make a change, there is no amount of analysis that will tell us with certainty whether it will work exactly as intended.

We are always walking at the edge of our understanding. We are working our way through a complicated networked system, a web of converging intentions. Changes to one part will routinely have unintended consequences elsewhere. Not only do we need to test our changes, but we need to know if we broke anything else. So how do we answer the question, “Does it still work?”

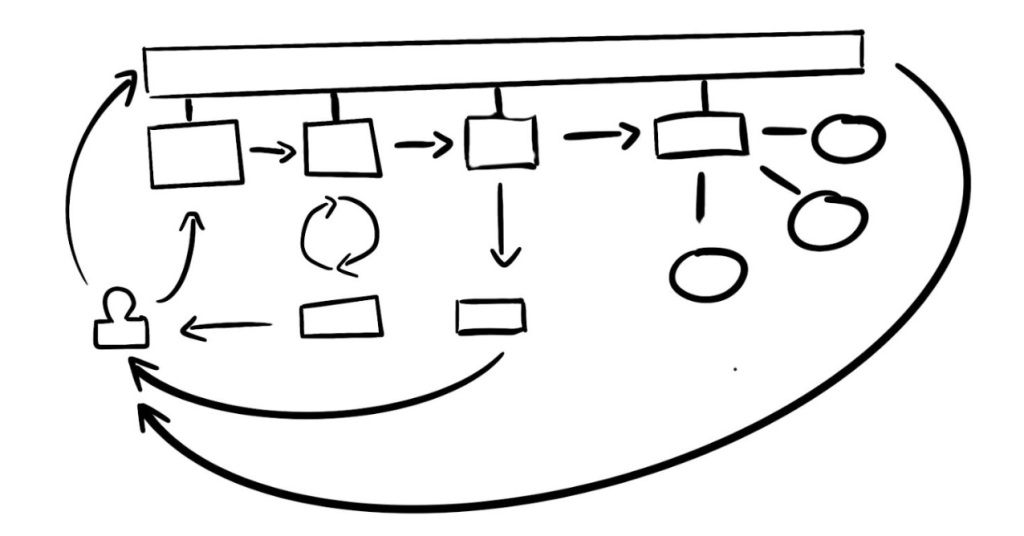

To answer this question, we push the synthetic approach across the delivery lifecycle and into adjacent domains.

We can do this continuously: we can automate it. In the medium of code, the “experiencing” is cheap and readily available to us. We externalize our core workflow and use support systems to validate our intentions every time we make a change.

We create proxies for our experience – systems that “do the experiencing” for us and report back the results. For example,

- We use automated tests that run every time we make a change to any part of the code base.

- We use continuous integration to verify our intentions and get a shared view of our code running as a change to the entire system.

- We use continuous delivery to push our changes into production-like, source-controlled environments.

- We use our pipelines to maintain consistency and provide fast feedback as our work moves into progressively larger boundaries of the system.
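The list above can be sketched as a tiny pipeline that runs checks in order of widening scope and stops at the first failure, so feedback arrives as early as possible. Everything here is hypothetical and simplified: `add_tax`, the two check functions, and `run_pipeline` stand in for a real code base, test suite, and CI system.

```python
from typing import Callable, List, Tuple

Check = Callable[[], bool]


def add_tax(price_cents: int, rate_percent: int) -> int:
    """Hypothetical unit of production code under test."""
    return price_cents + price_cents * rate_percent // 100


def unit_checks() -> bool:
    # Smallest boundary: one function in isolation.
    return add_tax(1000, 20) == 1200


def integration_checks() -> bool:
    # Larger boundary: the function used as part of an order total.
    return sum(add_tax(price, 20) for price in (1000, 500)) == 1800


def run_pipeline(stages: List[Tuple[str, Check]]) -> List[Tuple[str, bool]]:
    """Run stages in order and stop at the first failure."""
    results: List[Tuple[str, bool]] = []
    for name, check in stages:
        try:
            passed = bool(check())
        except Exception:
            passed = False  # a crashing check counts as a failed stage
        results.append((name, passed))
        if not passed:
            break  # later, more expensive stages are skipped
    return results


STAGES: List[Tuple[str, Check]] = [
    ("unit", unit_checks),
    ("integration", integration_checks),
]

if __name__ == "__main__":
    for name, passed in run_pipeline(STAGES):
        print(f"{name}: {'ok' if passed else 'FAILED'}")
```

Ordering the stages from cheapest to most expensive is a design choice in this sketch: a broken unit check is reported without paying for the slower stages behind it.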

The last decade has seen an ever-expanding set of these practices to externalize and proxy our individual experiences: test automation, repeatable builds, continuous integration, continuous delivery, and so on.

We put many of these under the banner of DevOps, which we can understand simply as synthetic thinking working its way across the value chain. The concept has proven itself so useful that our industry has developed a compulsion to extend the concept further and further: DevSecOps, DevTestOps, BizDevOps, NetDevOps, etc.

And it makes sense why we would want to create these new ontologies, these combined categories of understanding. We want to build a single view of what it means to be “done” or to “create value”, one that combines the different concepts that our disciplines use to experience the world. These are examples of our industry looking to extend what is intuitively useful: the capability to apply synthetic thinking across the software value stream.

3. Why doesn’t it work?

Once we have our software running, we need to extend our synthetic way of working one more time – into the observability of our system. Our primary goal when building is to ensure that things are simple and comprehensible so we can go on-call for them in production.

But as our system grows, services inevitably grind together like poorly fit gears. Intentions conflict, assumptions unravel, and the system operates in increasingly unexpected ways.

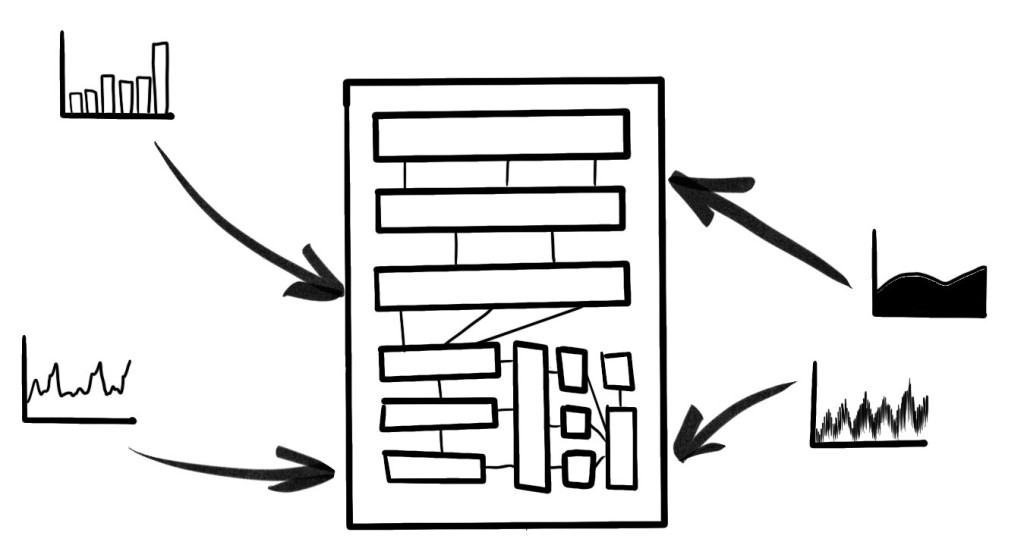

There is nothing to see, touch or hear, so we have the very difficult task of inferring what is happening from whatever signals we have available. We have learned to use practices that allow us to see deeply into the system, detect anomalies, run experiments, and respond to failure.

Observability is required when it becomes difficult to predict the behavior of a system and how users will be impacted by changes. In other words, we need it to ensure that our interactions with system phenomena align with customer experiences.

Making the system observable involves a practice of combining context, information, and specific knowledge about the system to create the conditions for understanding. The investment we make is in our capability to integrate knowledge – the collection of logs, metrics, events, traces and so on, correlated to their semantics and intent. We approach these problems empirically too, using experiments to discover, hypothesize and validate our intuitions.

Planning for failure does NOT mean thinking through all failure scenarios, as that would be impossible. Instead we need to think about how we can build a system that allows us to cope with surprise and learn from our environment.

Chaos engineering also embraces the experimental approach – rather than trying to decompose the system and decipher all its faults, let’s get our hands dirty. Let’s inject faults into the system and watch how it fails. Inspect, adapt, repeat. This is an experience-driven approach that has seen a lot of success building scaled software systems.
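A minimal sketch of the "inject faults and watch" loop, assuming a single in-process dependency and a simple retry policy. Real chaos experiments run against live infrastructure; the names `with_faults`, `call_with_retry`, and `run_experiment` are hypothetical and exist only for this illustration.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


class InjectedFault(Exception):
    """Raised by the injector in place of a real dependency failure."""


def with_faults(func: Callable[[], T], failure_rate: float, rng: random.Random) -> Callable[[], T]:
    """Wrap func so that a fraction of calls fail on purpose."""
    def wrapper() -> T:
        if rng.random() < failure_rate:
            raise InjectedFault("injected failure")
        return func()
    return wrapper


def call_with_retry(func: Callable[[], T], attempts: int) -> T:
    """Call func up to `attempts` times, re-raising the last injected fault."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return func()
        except InjectedFault:
            if attempt == attempts - 1:
                raise
    raise AssertionError("unreachable")


def run_experiment(failure_rate: float, attempts: int, trials: int, seed: int = 0) -> float:
    """Return the fraction of trials that succeed despite injected faults."""
    rng = random.Random(seed)  # seeded so the experiment is repeatable
    flaky = with_faults(lambda: "ok", failure_rate, rng)
    successes = 0
    for _ in range(trials):
        try:
            call_with_retry(flaky, attempts)
            successes += 1
        except InjectedFault:
            pass
    return successes / trials


if __name__ == "__main__":
    for attempts in (1, 3):
        rate = run_experiment(failure_rate=0.5, attempts=attempts, trials=1000)
        print(f"attempts={attempts}: success rate {rate:.2f}")
```

The point of the sketch is the shape of the experiment: state a hypothesis about the retry policy, inject the fault, observe the outcome, and adjust.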

With operational practices like these, we embrace the fact that our systems are complex and our work will always carry dark debt, no matter how good the code coverage is. Our best recourse is to give ourselves the ability to react quickly to unexpected events or cascading changes.

The solution to the Map Problem was impactful because it changed the world’s understanding of what we consider to be “verifiable information”. It showed us new ways to discover things about the world, and it did so by using the newly emerging synthetic approach to solving problems in the medium of code. But it also required a generation of mathematicians to update their understanding of what it means to “solve” a problem.

In software engineering, the separation has been less explicit. We have, unknowingly, been shifting the model of how to solve problems slowly towards the synthetic.

To understand why we are successful with the approaches we use, we need to be explicit about the way we are solving problems. That is why the recognition of the synthetic nature of software is so important.

This is not the end of the story, though. Although our systems are cobbled together from the combination of millions of tiny pieces, they hang together under the gravity of people and their intentions. “As a user …” Those intentions come into conflict, and the conflicts only become visible when we put things together.

I call this Synthetic Management, and it is the subject of part 2 of this article.